My LLM-assisted journaling workflow

Journaling (writing in a private diary) has quietly become one of my most useful habits. Over time, I’ve been experimenting with ways to bring the newest tools from my professional work into that practice, and I eventually landed on the workflow below. It has made journaling significantly more valuable for me; read on for why.

Before the workflow details, it’s worth asking: why journal at all?

Why journal?

For me, journaling is a tool for making progress. If something is bothering me or I’m stuck on a decision, journaling helps me refine raw thoughts and feelings into clear and actionable ideas. Once the thing is in written form, it becomes easier to:

- see the real shape of the problem (instead of whatever my brain is looping on)

- notice new directions to explore

- turn vague anxiety into concrete next steps

And it’s not just for personal things. Journaling is also great for working through technical ideas: teasing apart concepts, capturing constraints, and figuring out what to try next.

Key idea: optimize for low friction

When people think “journaling”, they often picture a notebook and a pen. I’ve done that, but my biggest problem was always friction: I handwrite a lot slower than I think, and the handwriting itself felt like an impediment to getting my thoughts out. Typing on my phone or computer was somewhat faster, but it was far too easy to get distracted by notifications, apps, etc.

The biggest step forward for me was dictation. Dictation feels conversational. It’s less intimidating than a blank page, and it’s much easier to dump a stream of consciousness without self-editing. I can be verbose and unstructured in the moment, and then clean it up later.

The main caveat: you need a quiet, private space where you can speak freely. That matters a lot if you’re journaling about anything personal.

The workflow (Apple Notes + MacWhisper + local LLM)

This workflow is very Apple-centric because I’m deep in that ecosystem.

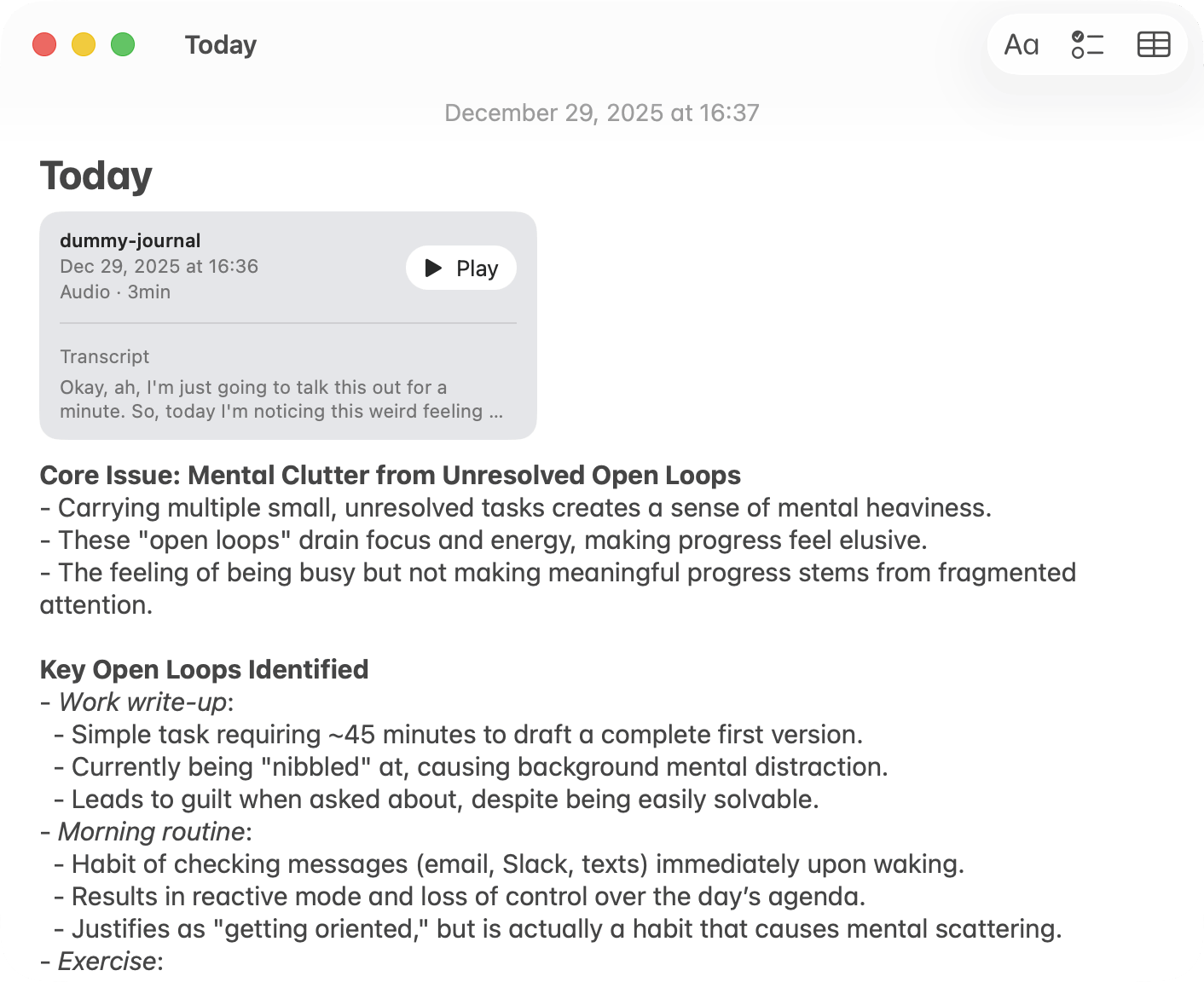

Step 1: dictate into Apple Notes

I use the Apple Notes app because it’s always there (iPhone + Mac) and sync is invisible. I don’t want to think about “where my journal lives” or whether today’s entry made it to the right device.

Here’s how I start an entry:

- Create a new note in a dedicated journaling folder

- Start an audio recording inside the note

- Speak stream-of-consciousness style

At this stage, the goal is raw material, not clarity. It’s totally fine if it’s meandering, repetitive, and peppered with filler words and pauses.

Apple Notes also does on-device transcription. It’s usually decent, and it’s immediately available, which makes it a nice first-pass transcript.

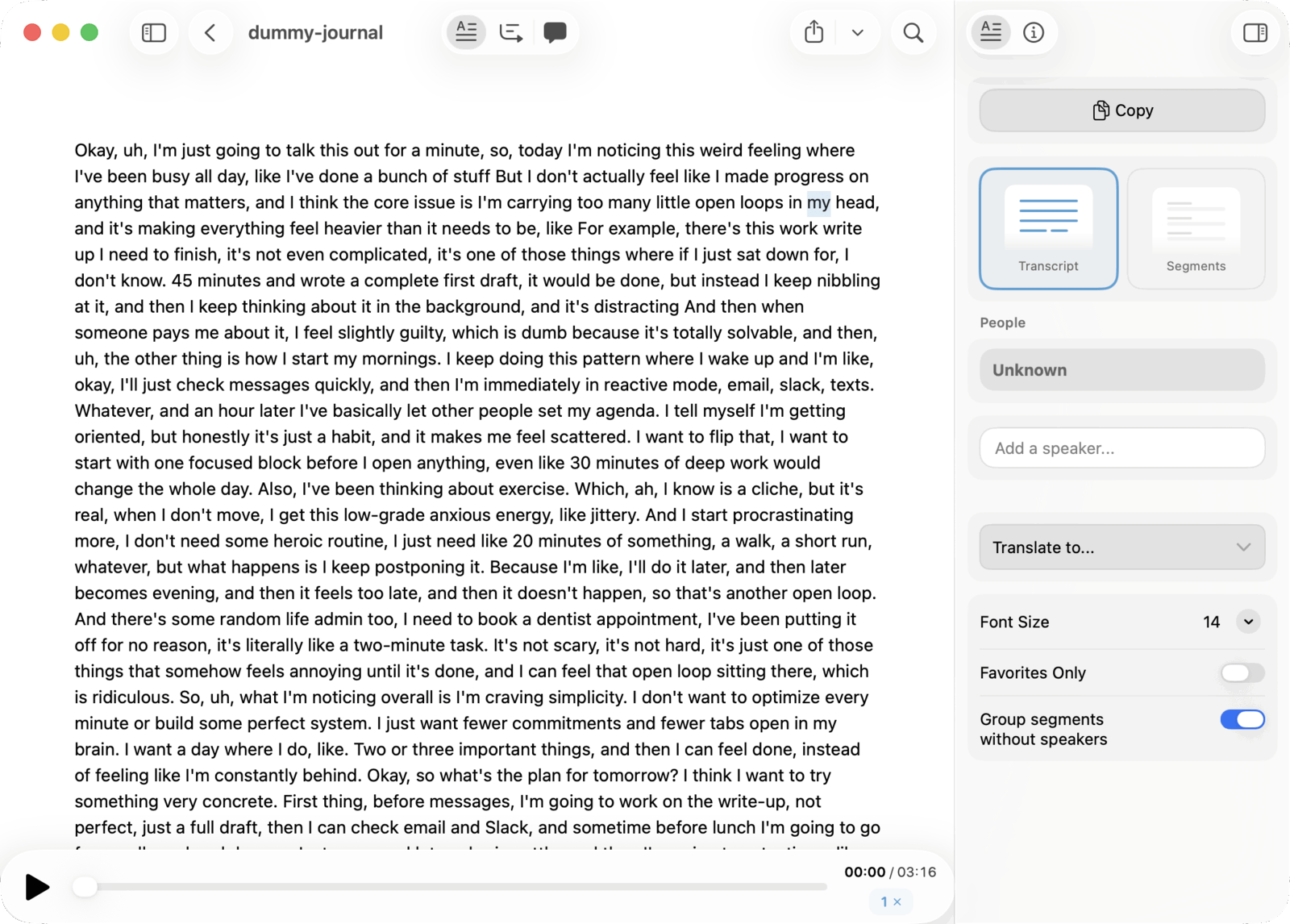

Step 2: run the recording through MacWhisper

Next, I drag the audio file into MacWhisper, a macOS app for transcribing audio using state-of-the-art models like OpenAI Whisper and NVIDIA Parakeet.

In this workflow, I use it for two reasons. First, its transcription is more accurate than the one from Apple Notes, especially with uncommon or domain-specific words. Second, its integrated one-click summary feature saves me from manually copying and pasting the transcript and the corresponding prompt into LM Studio (see the next section).

Step 3: summarize with a custom prompt (using a local model)

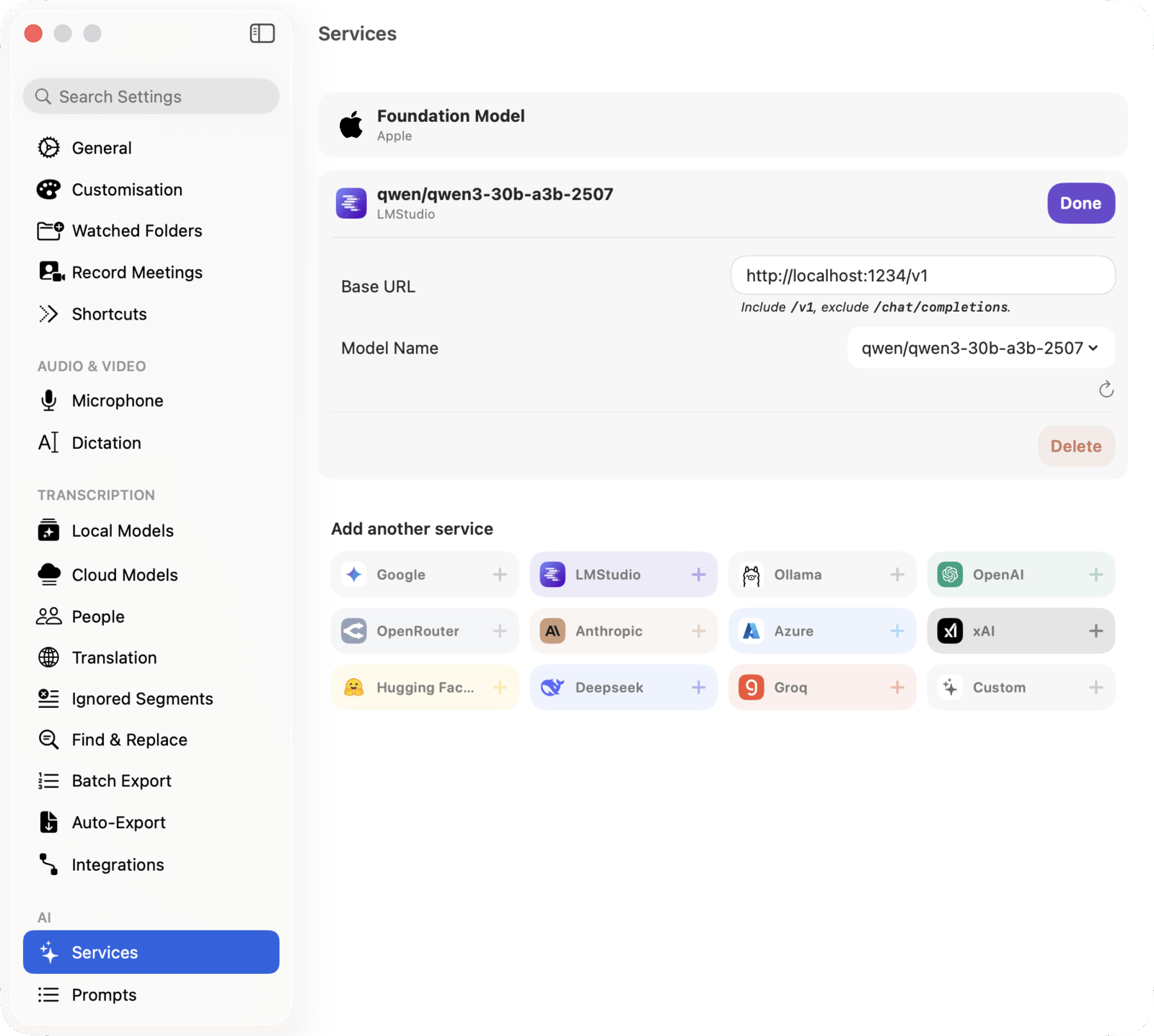

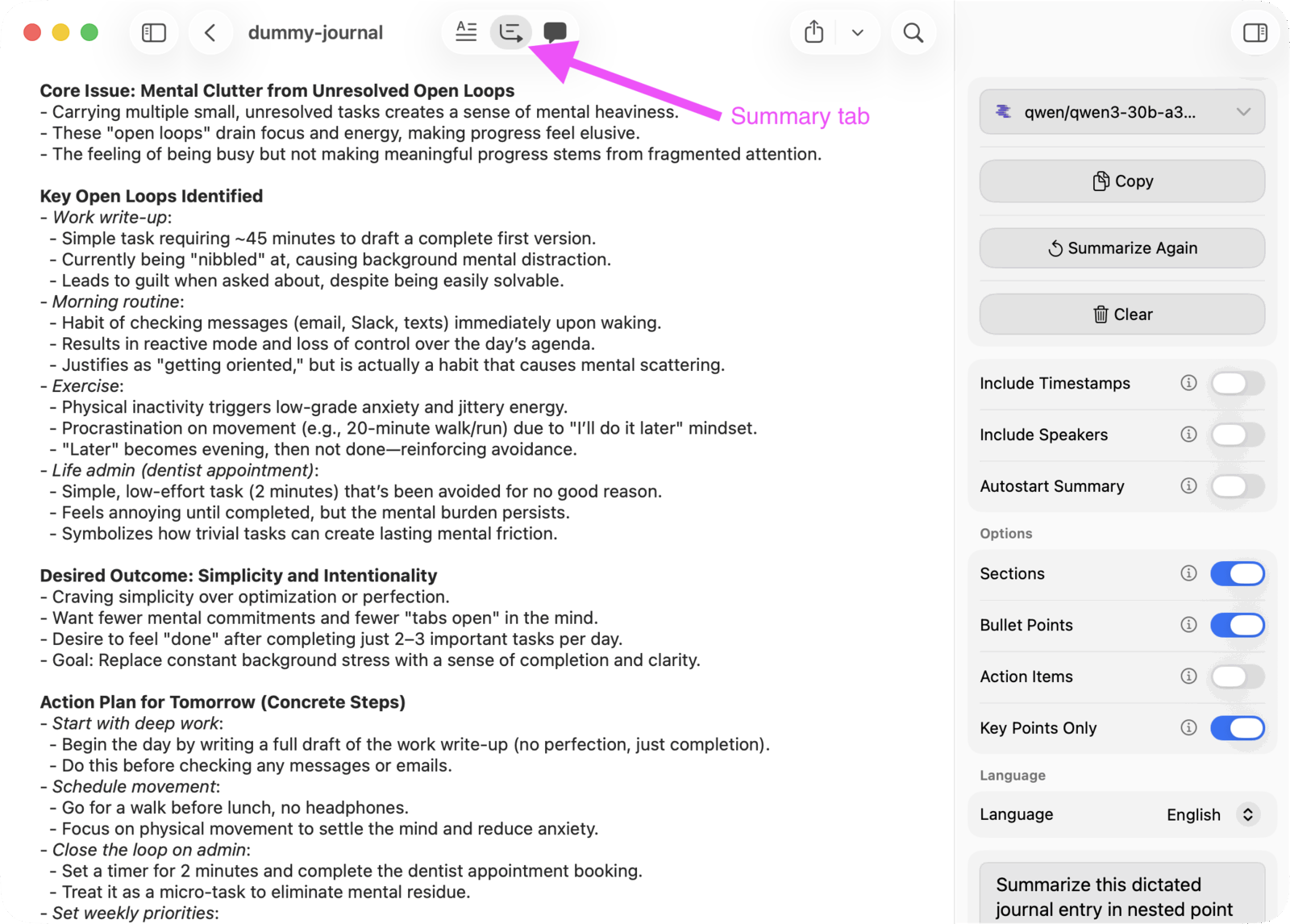

MacWhisper can generate an AI summary of the transcript. You can configure:

- the prompt

- which LLM to use

In my setup, I run LM Studio locally, load a Qwen mixture-of-experts model, expose it as a local network service, and then point MacWhisper at it. The choice of model is key: I settled on qwen/qwen3-30b-a3b-2507 after experimenting with a few others because it runs smoothly on my M1 MacBook Pro, shows good understanding of typical journal content, and usually generates a sensible (even insightful) summary.

This is the part that feels magical: in under a minute, I get a structured, concise version of what I just said.

The summary step is doing two important things:

- Condensing: remove fluff, repetition, “ums”, and everything that isn’t essential

- Structuring: turn a wall of transcript into bullet points I can scan and reflect on

It’s much easier to think about what I said when it’s in a tight outline; often the distilled outline format is itself enough to trigger some new insights when I first read it. And it’s far more useful when I’m looking back in the future, because I don’t want to re-read a giant transcript to remember what was going on.

Here’s the prompt I use:

Summarize this dictated journal entry in nested point form format. Find the most salient themes in the content and use those as top-level points. Then fill out sub-points, sub-sub points, etc. up to 3 levels of nesting. Each point should be concise and omit filler words.
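If you’d rather script this step than click through MacWhisper, here is a minimal sketch of sending a transcript and the prompt above straight to LM Studio. It assumes LM Studio’s OpenAI-compatible server is running at its default address (http://localhost:1234) with the model already loaded. The function names and the temperature value are my own placeholders, and this is not a description of what MacWhisper does internally.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is listening on its default port.
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"
MODEL = "qwen/qwen3-30b-a3b-2507"

SUMMARY_PROMPT = (
    "Summarize this dictated journal entry in nested point form format. "
    "Find the most salient themes in the content and use those as top-level "
    "points. Then fill out sub-points, sub-sub points, etc. up to 3 levels "
    "of nesting. Each point should be concise and omit filler words."
)


def build_payload(transcript: str) -> dict:
    """Build an OpenAI-style chat completion request body."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": SUMMARY_PROMPT},
            {"role": "user", "content": transcript},
        ],
        "temperature": 0.3,  # hypothetical value; tune to taste
    }


def summarize(transcript: str, url: str = LM_STUDIO_URL) -> str:
    """POST the transcript to the local server and return the summary text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(build_payload(transcript)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    # Generous timeout: a long entry on a laptop can take a while.
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `summarize(transcript_text)` with the transcript as a string should return the outline as plain text, ready to paste into the note. Keeping `build_payload` separate makes it easy to inspect exactly what gets sent before anything touches the server.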

Step 4: copy the summary back into the original note

Finally, I copy the generated summary and paste it into the top of the original Apple Notes note (the one that contains the recording).

That’s it. The raw recording is there if I ever want it, but the thing I actually read later is the summary.

Privacy and tradeoffs

The obvious question with “AI journaling” is privacy. I trust Apple with data storage, but keep the rest to local tools only:

- the note + audio live in Apple Notes (synced via iCloud with Advanced Data Protection)

- transcription and summarization happen on my Mac

- the LLM is served locally via LM Studio (no external API calls)

This isn’t “perfect security”, but it’s a set of tradeoffs I’m comfortable with: high usefulness, low friction, and no raw journal transcript being shipped to a third-party LLM service.
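One small sanity check for the “no external API calls” point: confirm that the endpoint URL you configured for the LLM actually points at your own machine or local network. The sketch below is a hypothetical helper (the name `is_local_endpoint` is mine) that only inspects the URL. It accepts `localhost` and loopback or private IP literals, and conservatively rejects any other hostname. It says nothing about what other network traffic an app might generate.

```python
import ipaddress
from urllib.parse import urlparse


def is_local_endpoint(url: str) -> bool:
    """Return True if the URL's host is localhost or a loopback/private IP literal."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        address = ipaddress.ip_address(host)
    except ValueError:
        # Some other DNS name: not provably local, so reject it.
        return False
    return address.is_loopback or address.is_private
```

For example, `is_local_endpoint("http://localhost:1234/v1")` is true, while a hosted API URL such as `https://api.openai.com/v1` is rejected.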

Try it!

This workflow feels like journaling with a power tool: I can speak naturally, then get back a clean, structured artifact that’s great for later reflection.

If you already journal, try dictation. If you don’t journal because it feels like too much work, optimizing for low friction is the best trick I know.

Daniel P Gross
